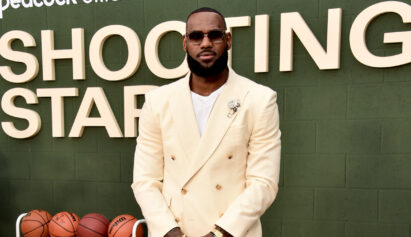

By Dina Bass

Timnit Gebru is one of the leading voices working on ethics in artificial intelligence. Her research has explored ways to combat biases, such as racism and sexism, that creep into AI through flawed data and creators. At Google, she and colleague Margaret Mitchell ran a team focused on the subject—until they tried to publish a paper critical of Google products and were dismissed. (Gebru says Google fired her; the company says she resigned.) Now Gebru, a founder of the affinity group Black in AI, is lining up backers for an independent AI research group. Calls to hold Big Tech accountable for its products and practices, she says, can’t all be made from inside the house.

What can we do right now to make AI more fair—less likely to disadvantage Black Americans and other groups in everything from mortgage lending to criminal sentencing?

The baseline is labor protection and whistleblower protection and anti-discrimination laws. Anything we do without that kind of protection is fundamentally going to be superficial, because the moment you push a little bit, the company’s going to come down hard. Those who push the most are always going to be people in specific communities who have experienced some of these issues.

What are the big, systemic ways that AI needs to be reconceived in the long term?

We have to reimagine, what are the goals? If the goal is to make maximum money for Google or Amazon, no matter what we do it’s going to be just a Band-Aid. There’s this assumption in the industry that hey, we’re doing things at scale, everything is automated, we obviously can’t guarantee safety. How can we moderate every single thing that people write on social media? We can randomly flag your content as unsafe or we can have all sorts of misinformation—how do you expect us to handle that?

That’s how they’re acting, like they can just make as much money as they want from products that are extremely unsafe. They need to be forced not to do that.

What might that look like?

Let’s look at cars. You’re not allowed to just sell a car and be like hey, we’re selling millions of cars, so we can’t guarantee the safety of each one. Or, we’re selling cars all over the world, so there’s no place you can go to complain that there’s an issue with your car, even if it spontaneously combusts or sends you into a ditch. They’re held to much higher standards, and they have to spend a lot more, proportionately, on safety.

What, specifically, should government do?

Products have to be regulated. Government agencies’ jobs should be expanded to investigate and audit these companies, and there should be standards that have to be followed if you’re going to use AI in high-stakes scenarios. Right now, government agencies themselves are using highly unregulated products when they shouldn’t. They’re using Google Translate when vetting refugees.

As an immigrant [Gebru is Eritrean and fled Ethiopia in her teens, during a war between the two countries], how do you feel about U.S. tech companies vying to sell AI to the Pentagon or Immigration and Customs Enforcement?

People have to speak up and say no. We can decide that what we should be spending our energy and money on is how not to have California burning because of climate change and how to have safety nets for people, improve our health, food security. For me, migration is a human right. You’re leaving an unsafe place. If I wasn’t able to migrate, I don’t know where I would be.

Was your desire to form an independent venture driven by your experience at Google?

One hundred percent. There’s no way I could go to another large tech company and do that again. Whatever you do, you’re not going to have complete freedom—you’ll be muzzled in one way or another, but at least you can have some diversity in how you’re muzzled.

Does anything give you hope about increasing diversity in your field? The labor organizing at Google and Apple?

All of the affinity groups—Queer in AI, Black in AI, Latinx in AI, Indigenous AI—they have created networks among themselves and among each other. I think that’s promising and the labor organizing, in my view, is extremely promising. But companies will have to be forced to change. They would rather fire people like me than have any minuscule amount of change.
